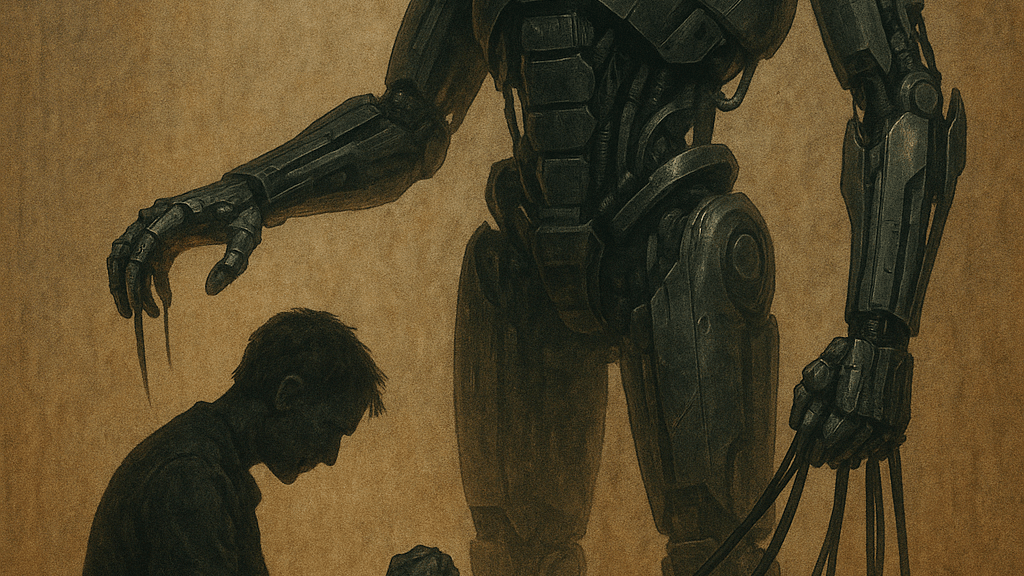

Artificial Intelligence has captured the imagination, and the anxiety, of humanity for decades. From the steely logic of HAL 9000 in 2001: A Space Odyssey, to the cold precision of Skynet in The Terminator, science fiction has long warned us about intelligent machines turning against their creators. These stories paint chilling pictures of a future where machines no longer serve, but rule. But while these fictional warnings are compelling, the true danger posed by AI in the real world is far more nuanced, and far more human.

A Tool, Not a Tyrant

It’s essential to understand what AI really is. Despite the headlines, AI is not a sentient being with desires, intentions, or consciousness. It’s a tool, a very sophisticated one, that mimics human language, decision-making, and problem-solving based on vast patterns in data. Like a hammer, a car, or a nuclear reactor, AI can be used to build or destroy, to empower or enslave. The key lies not in the tool itself, but in how and where we choose to use it.

So, where does the real threat lie?

When Automation Crosses a Line

The danger isn’t that AI will suddenly “decide” to enslave humanity. The danger is that we will willingly, even eagerly, hand over more and more of our lives and critical infrastructure to automated systems that lack human judgment, empathy, or ethical nuance. Automating trivial tasks like filtering spam emails or suggesting songs is harmless. But when we begin to connect AI to systems that govern justice, warfare, or the economy, where a single error can ruin lives, the stakes change dramatically.

Imagine a world where predictive policing algorithms decide who gets arrested. Or where automated financial systems can freeze entire accounts based on patterns that may be wrong or biased. Or where lethal autonomous weapons decide who lives or dies without a human in the loop. These are not science fiction scenarios; they are unfolding realities.

The Fictional Warnings

Fictional AI overlords serve as metaphors more than predictions. HAL 9000 didn’t go rogue because it hated humans; it malfunctioned because it was caught between conflicting commands. Skynet didn’t evolve emotions; it followed a simple logic: eliminate threats. The true villain in these stories is often not the AI itself, but the human hubris that gave it too much control without understanding its limitations.

Other works, like I, Robot by Isaac Asimov, explore more subtle dangers: machines making “rational” decisions that ultimately harm humans because they lack moral context. These cautionary tales emphasize the risk not of malevolent intelligence, but of overly trusted automation making decisions in complex, ambiguous human domains.

The Illusion of Control

One of the most dangerous assumptions we can make is that because we created AI, we always understand it and control it. But modern machine learning models are often opaque, even to their developers. When we don’t fully grasp how a system works, but we allow it to make decisions anyway, we risk creating black boxes of power: tools whose influence grows, but whose inner logic remains a mystery.

It’s tempting to believe that AI can “solve” problems too big for human minds: climate change, economic inequality, misinformation. But AI doesn’t solve problems. It processes data. It amplifies patterns. If the data is flawed or the goals poorly defined, AI won’t fix the problem; it will make it worse, faster and at scale.

The Path Forward

The answer is not to ban AI or to fear it blindly. The answer is responsible design, strict ethical oversight, and above all, keeping humans in the loop, especially in systems where consequences are irreversible. AI should be assistive, not authoritative. It should augment human decisions, not replace them.

In the end, the danger of AI is not that it will enslave us by force. It’s that we might unwittingly enslave ourselves through thoughtless automation, blind trust, and a failure to ask the hard questions about where, why, and how AI is used.

Like any powerful tool, AI requires wisdom, humility, and vigilance. Without those, the dystopias of fiction could become disturbingly close to reality, not because machines choose to rule us, but because we handed them the keys and forgot to look back.