In the last few years, the boundaries of where and how we play PC games have been rapidly dissolving. The Steam Deck proved that you don’t need a desktop tower to enjoy your Steam library, and now projects like Proton and FEX are taking that idea one step further, bringing Windows gaming even to ARM-based Android devices.

Steam’s Breakthrough: Running Windows Games on Linux

When Valve launched the Steam Deck, it didn’t just create a new handheld console; it introduced a paradigm shift. The Steam Deck runs SteamOS, a Linux-based operating system. But since most PC games are designed for Windows, Valve needed a way to make them compatible without asking every developer to port their game to Linux.

The solution came in the form of an API translation layer called Proton (often confused with “Photon”), a compatibility tool built on top of Wine and DXVK.

Here’s how it works:

- Windows games use Microsoft’s DirectX graphics APIs.

- Linux, however, uses Vulkan or OpenGL.

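- Proton intercepts DirectX calls and translates them in real time to Vulkan, a low-overhead, cross-platform graphics API that runs natively on Linux. The translation itself is handled by bundled components: DXVK for Direct3D 11 and earlier, and VKD3D-Proton for Direct3D 12.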

The result is astonishingly efficient. Because the Steam Deck uses the same CPU architecture (x86-64) as a Windows PC, no instruction-level translation is required, only the API layer. That’s why games can run at close to native speed, with some titles reportedly performing even better on SteamOS than on Windows.
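To make the idea of API translation concrete, here is a deliberately tiny sketch. Every name in it (`ToyD3DDevice`, `ToyVulkanBackend`, and their methods) is invented for illustration and is not the real Direct3D, Vulkan, or DXVK interface. It only shows the shape of the trick: the game keeps calling the API it was written for, and a thin layer forwards each call to the API the host actually provides.

```python
from dataclasses import dataclass, field


@dataclass
class ToyVulkanBackend:
    """Stand-in for the host's native graphics API; it just records commands."""
    commands: list = field(default_factory=list)

    def cmd_bind_vertex_buffer(self, buffer_id: int) -> None:
        self.commands.append(("bind_vertex_buffer", buffer_id))

    def cmd_draw(self, vertex_count: int, first_vertex: int) -> None:
        self.commands.append(("draw", vertex_count, first_vertex))


class ToyD3DDevice:
    """Hypothetical translation layer: Direct3D-flavoured names on the outside,
    host-API calls on the inside."""

    def __init__(self, backend: ToyVulkanBackend) -> None:
        self.backend = backend

    def SetVertexBuffer(self, buffer_id: int) -> None:
        self.backend.cmd_bind_vertex_buffer(buffer_id)

    def Draw(self, vertex_count: int, start_vertex: int) -> None:
        self.backend.cmd_draw(vertex_count, start_vertex)


def run_game_frame(device: ToyD3DDevice) -> None:
    """The 'game' only knows the Direct3D-style interface."""
    device.SetVertexBuffer(7)
    device.Draw(3, 0)


backend = ToyVulkanBackend()
run_game_frame(ToyD3DDevice(backend))
print(backend.commands)  # [('bind_vertex_buffer', 7), ('draw', 3, 0)]
```

Notice that nothing here touches CPU instructions. The game code runs unmodified, which is why this kind of layer can be so cheap when the processor architecture already matches.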

Going One Step Further: Translating x86 to ARM

But what if we wanted to go beyond Linux on x86, say, to run those same games on an ARM-based platform like Android?

That’s where FEX (the FEX-Emu project) and similar technologies come in. FEX acts as a CPU translation layer, dynamically converting x86 and x86-64 instructions into ARM64 instructions on the fly.

This is a much harder problem than translating graphics APIs, because:

- The CPU instruction sets are completely different.

- Code has to be interpreted or recompiled, typically by a just-in-time compiler, as it runs.

- Performance depends heavily on how efficiently this translation is done.

However, ARM processors, like those in modern smartphones or Apple Silicon Macs, have become so fast and efficient that real-time x86-to-ARM translation is now practical for many use cases, including gaming.
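For a feel of what "recompiling code as it runs" means, here is a toy dynamic translator. It is only a sketch under heavy assumptions: the three-block guest program and its `mov`/`add`/`jnz` mini instruction set are made up, and the "host code" is generated Python rather than ARM64 machine code. It is not how FEX is implemented. What it does show is the core loop that translators of this kind rely on: translate a block of guest code the first time it is reached, cache the result, and reuse it on every later visit.

```python
# Hypothetical guest program: address -> (instructions, terminator).
GUEST_BLOCKS = {
    0: ([("mov", "acc", 0), ("mov", "n", 3)], ("jmp", 1)),
    1: ([("add", "acc", 10), ("add", "n", -1)], ("jnz", "n", 1, 2)),
    2: ([], ("halt",)),
}


class ToyTranslator:
    def __init__(self, blocks):
        self.blocks = blocks
        self.cache = {}          # guest address -> translated host function
        self.translations = 0    # how many blocks were translated
        self.executed = 0        # how many blocks were executed

    def translate(self, addr):
        """Emit host (Python) source for one guest block and compile it."""
        instrs, term = self.blocks[addr]
        lines = ["def host_block(regs):"]
        for op, reg, imm in instrs:
            if op == "mov":
                lines.append(f"    regs[{reg!r}] = {imm}")
            elif op == "add":
                lines.append(f"    regs[{reg!r}] += {imm}")
            else:
                raise ValueError(f"unknown guest op: {op}")
        if term[0] == "jmp":
            lines.append(f"    return {term[1]}")
        elif term[0] == "jnz":
            _, reg, taken, fallthrough = term
            lines.append(
                f"    return {taken} if regs[{reg!r}] != 0 else {fallthrough}"
            )
        elif term[0] == "halt":
            lines.append("    return None")
        else:
            raise ValueError(f"unknown terminator: {term[0]}")
        namespace = {}
        exec("\n".join(lines), namespace)
        self.translations += 1
        return namespace["host_block"]

    def run(self, entry=0):
        regs, addr = {}, entry
        while addr is not None:
            block = self.cache.get(addr)
            if block is None:                 # first visit: translate and cache
                block = self.cache[addr] = self.translate(addr)
            addr = block(regs)                # run host code, get next address
            self.executed += 1
        return regs


translator = ToyTranslator(GUEST_BLOCKS)
regs = translator.run()
print(regs)                                          # {'acc': 30, 'n': 0}
print(translator.translations, translator.executed)  # 3 5
```

The loop body at address 1 runs three times but is translated only once. That reuse is where the performance comes from: hot code paths pay the translation cost a single time and then run as host code.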

When you combine Proton (API translation) with FEX (architecture translation), you essentially get a full Windows-compatibility stack that can run on ARM Linux or even Android. This means the dream of playing Windows PC games on your phone is no longer science fiction. It’s already here.
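One way to keep the two layers straight is to ask two separate questions: does the operating system match, and does the CPU architecture match? The helper below is a hypothetical illustration of that reasoning (the function name and the layer labels are my own, not part of any of these projects).

```python
def layers_needed(game_os, game_arch, host_os, host_arch):
    """Return which translation layers a game would need on a given host."""
    layers = []
    if game_os != host_os:
        layers.append("API translation (Wine/Proton-style)")
    if game_arch != host_arch:
        layers.append("CPU translation (FEX-style)")
    return layers


# A Windows x86-64 game on three different hosts:
print(layers_needed("windows", "x86-64", "windows", "x86-64"))
# []
print(layers_needed("windows", "x86-64", "linux", "x86-64"))   # Steam Deck
# ['API translation (Wine/Proton-style)']
print(layers_needed("windows", "x86-64", "android", "arm64"))  # ARM phone
# ['API translation (Wine/Proton-style)', 'CPU translation (FEX-style)']
```

The Steam Deck only pays for the first layer. An ARM phone pays for both, which is why hardware performance matters so much more there.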

Windows Games on Android: Winlator and GameHub

Projects like Winlator are making this vision a reality. Winlator is an Android app that bundles the entire translation pipeline (Wine, Proton-like graphics layers, and FEX-style CPU emulation) inside a mobile-friendly interface. With it, you can install and run many Windows games right on your phone or tablet.

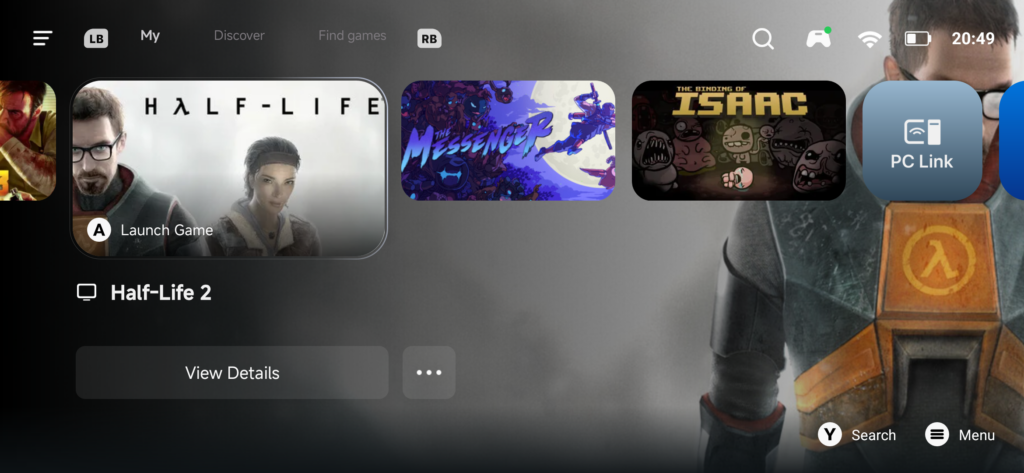

Similarly, GameHub acts as a launcher that connects to your Steam account, letting you browse and launch your library through the compatibility stack. It’s not as seamless as on the Steam Deck yet, but it’s getting there rapidly thanks to the open-source community.

The main limitation now is hardware performance. While flagship mobile SoCs like Qualcomm’s Snapdragon 8 Gen 3 or Apple’s M-series chips are incredibly powerful, they still can’t match the sustained throughput of a dedicated gaming PC. But here’s the thing: they don’t need to.

A large percentage of Steam’s catalog consists of indie and mid-range games that don’t require cutting-edge graphics or massive CPU budgets. Titles built in Unity, Godot, or older Unreal versions often run beautifully under these emulation layers.

In other words, you might not be playing Cyberpunk 2077 on your phone anytime soon. But Celeste, Hollow Knight, Stardew Valley, Portal, or Dead Cells? Those are already well within reach.

The Road Ahead

The combination of Proton and FEX-style emulation represents the next frontier in platform compatibility. As mobile hardware continues to evolve, and as open-source developers refine these translation layers, we’re heading toward a future where your entire Steam library might truly follow you anywhere: no ports, no re-purchases, no compromises.

The Steam Deck proved that PC gaming can leave the desk. Proton, FEX, and projects like Winlator are proving it can leave the house entirely.