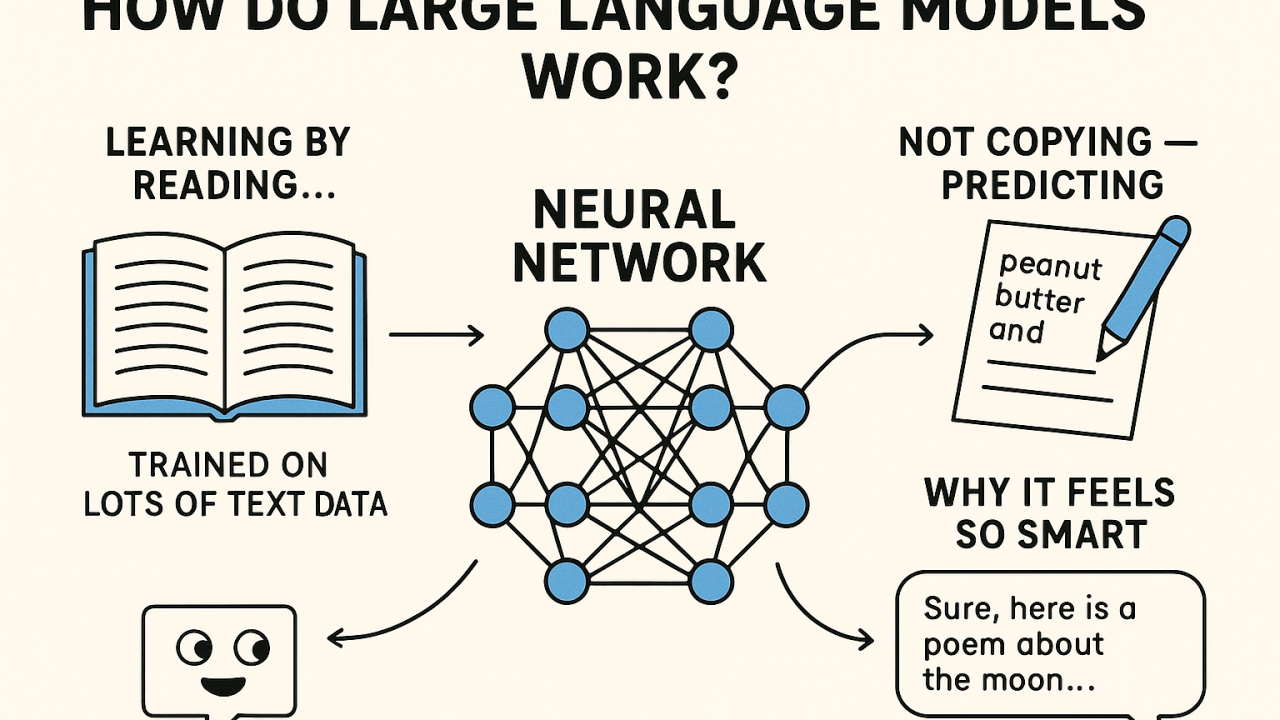

Large Language Models (LLMs), like the ones behind ChatGPT, might seem like magic: you type in a question or a sentence, and suddenly you get a thoughtful, often useful response. But what’s actually going on under the hood? Let’s break it down in plain language.

Learning by Reading… A Lot

Imagine trying to learn a new language by reading millions of books, articles, websites, and conversations. That’s what an LLM does during training. It reads huge amounts of text (like a super-fast speed reader) to learn how people typically use words, form sentences, and express ideas.

But here’s the catch: the model doesn’t “understand” in the way humans do. It doesn’t know facts, emotions, or what it’s like to have experiences. Instead, it gets very good at guessing what words should come next in a sentence. So if you say “peanut butter and…”, it’s likely to guess “jelly” because it has seen that combination a lot during training.
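To make the “peanut butter and…” idea concrete, here is a minimal sketch in Python. It is not how a real LLM works inside (real models use neural networks, not simple counts), and the tiny training text and the function names `train_next_word_counts` and `guess_next` are made up for illustration. It only shows the basic idea of guessing the next word from what has been seen before.

```python
from collections import Counter, defaultdict

# Tiny made-up "training text"; a real model sees vastly more.
corpus = (
    "peanut butter and jelly . "
    "peanut butter and jelly sandwich . "
    "bread and butter . "
    "peanut butter and banana ."
)

def train_next_word_counts(text):
    """Count, for each word, which words followed it in the text."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def guess_next(counts, word):
    """Return the most frequently seen follower of `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

counts = train_next_word_counts(corpus)
print(guess_next(counts, "and"))  # "jelly" followed "and" most often in this text
```

Because “jelly” follows “and” more often than anything else in this toy text, that is the guess. A word the sketch has never seen gets no guess at all, which is one reason real models need so much training text.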

Not Copying, Predicting

LLMs don’t just memorize things word for word. Instead, they learn patterns. Think of it like how you can guess the next note in a familiar song or finish someone’s sentence because you’ve heard similar things before.

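For example, if you ask it to write a poem about the moon, it doesn’t look up a moon poem from memory. Instead, it predicts one word at a time (strictly speaking, one “token”, which is a word or a piece of a word) based on everything it’s learned, and each new word becomes part of the text it uses to predict the next one. It’s a bit like predictive text on your phone, but on steroids.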
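The one-word-at-a-time loop can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the `NEXT_WORD_PROBS` table is hand-written and stands in for the probabilities a trained model would compute, and `generate` is a hypothetical helper, not part of any real library.

```python
import random

# Hypothetical, hand-written probabilities standing in for a trained model.
NEXT_WORD_PROBS = {
    "the": {"moon": 0.6, "night": 0.4},
    "moon": {"glows": 0.5, "rises": 0.5},
    "glows": {"over": 1.0},
    "rises": {"over": 1.0},
    "over": {"the": 1.0},
    "night": {"sky": 1.0},
    "sky": {"<end>": 1.0},
}

def generate(start, max_words=8, seed=0):
    """Build a phrase by repeatedly picking a next word for the last word so far."""
    rng = random.Random(seed)  # fixed seed so the same call gives the same text
    words = [start]
    while len(words) < max_words:
        options = NEXT_WORD_PROBS.get(words[-1])
        if options is None:
            break  # no known follower for this word
        # Pick one follower at random, weighted by its probability.
        next_word = rng.choices(list(options), weights=list(options.values()))[0]
        if next_word == "<end>":
            break
        words.append(next_word)
    return " ".join(words)

print(generate("the"))
```

Nothing is looked up as a finished poem. The text grows one word at a time, and changing the seed can send it down a different path.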

What’s Inside the Model?

At the core of an LLM is something called a neural network: a very big and very complex math system loosely inspired by how our brains work. This network has billions of little adjustable numbers called “parameters.” These parameters are tweaked during training to help the model make better predictions.

Think of it like tuning a guitar, but instead of six strings, imagine billions of tiny knobs being adjusted so the model gets better at sounding “right” when it talks.
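Here is a rough sketch of what “adjusting a knob” can look like, shrunk down to a single parameter. It assumes a toy task (learning that the output is 3 times the input) and uses gradient descent, a standard way of nudging parameters to reduce error. The data, the learning rate, and the names `average_error` and `train` are all illustrative choices, not taken from any particular model.

```python
# Toy data following y = 3 * x, so the "right" knob setting is 3.
data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]

def average_error(w):
    """Average squared gap between the prediction w * x and the true y."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

def train(w=0.0, learning_rate=0.05, steps=200):
    """Nudge the single parameter w, step by step, toward lower error."""
    for _ in range(steps):
        # Slope of the average squared error with respect to w.
        gradient = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        # Move w a small step in the direction that reduces the error.
        w -= learning_rate * gradient
    return w

w = train()
print(w, average_error(w))
```

The knob starts at 0 and ends up very close to 3. A real LLM does something similar in spirit, but with billions of knobs adjusted at once.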

Why It Feels So Smart

Because the model has seen so much text, it can often mimic intelligence. It can solve math problems, write stories, summarize news, or even pretend to be a pirate. But remember, it’s not thinking or understanding. It’s just generating words that are likely to follow based on patterns it learned.

Sometimes it’s eerily accurate. Other times, it makes things up (“hallucinates”) or gives wrong answers with confidence. That’s why human judgment is still important.

Final Thoughts

Large Language Models are powerful tools, kind of like calculators for language. They don’t think, feel, or know, but they can be incredibly helpful by turning what they’ve read into coherent, often useful text. They’re a mix of math, data, and prediction, and while they’re not magic, they can sure feel like it sometimes.